My homelab environment is my way of cost-effectively trialling different types of tools and running them like a production service.

Getting a home environment onto the wider internet cleanly is quite difficult when ISPs expect you to only consume content.

I had some trouble getting port forwarding working with my new ISP, who use CGNAT and supply router hardware that I can't configure. Behind CGNAT my router doesn't get a public IPv4 address of its own, so all my attempts failed, even though I'd been doing DDNS with this script for a while.

Researching reverse proxy setups and SSL configuration was getting tedious, so I thought I'd try a Cloudflare service called Argo Tunnel.

Basically, you point it at something serving HTTP/HTTPS and it forwards traffic to Cloudflare, bypassing NAT because the tunnel connection is made outbound from your server. It also removes the need to set up TLS on your server: the tunnel software secures the connection to Cloudflare, and Cloudflare provides TLS termination.
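As a rough sketch of that flow, a single service can be exposed with the legacy Argo Tunnel flags --hostname and --url. This assumes cloudflared is on your PATH and that cloudflared tunnel login has already stored an origin certificate; the helper name run_tunnel and the example hostname and port are hypothetical, and newer cloudflared releases favour named tunnels, so check the flags against the version you run.

```shell
#!/usr/bin/env bash
# Minimal sketch: expose one local HTTP service through a legacy Argo Tunnel.
# Assumes cloudflared is on PATH and `cloudflared tunnel login` has been run.
# run_tunnel is a hypothetical helper name, not part of cloudflared.
run_tunnel() {
  local hostname="$1"   # public name Cloudflare will serve, e.g. blog.example.com
  local local_url="$2"  # where the service listens, e.g. http://localhost:8080
  # cloudflared dials out to Cloudflare, so no inbound port forward is needed;
  # Cloudflare terminates TLS for $hostname and sends requests down the tunnel.
  cloudflared tunnel --hostname "$hostname" --url "$local_url"
}
```

Calling run_tunnel blog.example.com http://localhost:8080 would keep the tunnel open in the foreground until you stop it.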

For multiple services you need multiple config files and systemd units. Systemd template units let a single unit file define multiple units under an umbrella service.

I found this helpful tip on the GitHub issues page for defining as many services via config files as I needed.
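Here is one possible shape for that setup, written as shell so it can live in a provisioning script. It assumes cloudflared is installed at /usr/local/bin/cloudflared, that each service gets its own /etc/cloudflared/NAME.yml using the legacy hostname and url keys, that cloudflared accepts --config before the tunnel subcommand, and that it can find the origin certificate from tunnel login. The function names and paths are hypothetical, not taken from the GitHub issue. systemd replaces %i in a template with the instance name, so cloudflared@blog.service reads /etc/cloudflared/blog.yml.

```shell
#!/usr/bin/env bash
# Sketch: one systemd template unit plus one config file per tunnelled service.
# Binary path, config paths and the ExecStart flag order are assumptions.

# Write the template unit. systemd substitutes %i with the instance name,
# e.g. cloudflared@blog.service -> /etc/cloudflared/blog.yml.
write_template_unit() {
  local unit_dir="${1:-/etc/systemd/system}"
  mkdir -p "$unit_dir"
  cat > "$unit_dir/cloudflared@.service" <<'EOF'
[Unit]
Description=Argo Tunnel for %i
After=network-online.target
Wants=network-online.target

[Service]
ExecStart=/usr/local/bin/cloudflared --config /etc/cloudflared/%i.yml tunnel
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

# Write a per-service config using the legacy hostname/url keys.
write_service_config() {
  local name="$1" hostname="$2" url="$3" conf_dir="${4:-/etc/cloudflared}"
  mkdir -p "$conf_dir"
  printf 'hostname: %s\nurl: %s\n' "$hostname" "$url" > "$conf_dir/$name.yml"
}
```

After calling both helpers, systemctl daemon-reload followed by systemctl enable --now cloudflared@blog would start that instance; each extra service is then just another config file and another enable.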

You can also do the same process for SSH!

This is a very easy guide to SSH via Cloudflare Access.
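A sketch of both ends, assuming the legacy tunnel flags on the server and cloudflared on the client's PATH: the server exposes its SSH daemon through a tunnel with an ssh:// origin, and the client's ssh config hands the connection to cloudflared access ssh via ProxyCommand so Access can authenticate you first. The helper names and ssh.example.com are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of SSH through a tunnel plus Cloudflare Access. Helper names are
# hypothetical; the tunnel flags are the legacy Argo Tunnel ones.

# Server side: forward the public hostname to the local SSH daemon.
serve_ssh() {
  local hostname="$1"
  cloudflared tunnel --hostname "$hostname" --url ssh://localhost:22
}

# Client side: print an ~/.ssh/config entry. ssh expands %h to the host name,
# so printf needs %%h to emit a literal %h.
ssh_config_entry() {
  local hostname="$1"
  printf 'Host %s\n  ProxyCommand cloudflared access ssh --hostname %%h\n' "$hostname"
}
```

On the client, appending the output of ssh_config_entry ssh.example.com to ~/.ssh/config would make a plain ssh to that host go through Access.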

I'm hosting this blog using AWS and S3 through Cloudflare. It's a mix of technology that's useful for me to pick up right now because it's mature, and I like boring technology.

However, the biggest issue I faced was inconsistent AWS documentation about permissions, so I'm noting down what I did to help anyone else wanting to do the same thing.

I followed the links here but made some changes, which I'll note alongside them.

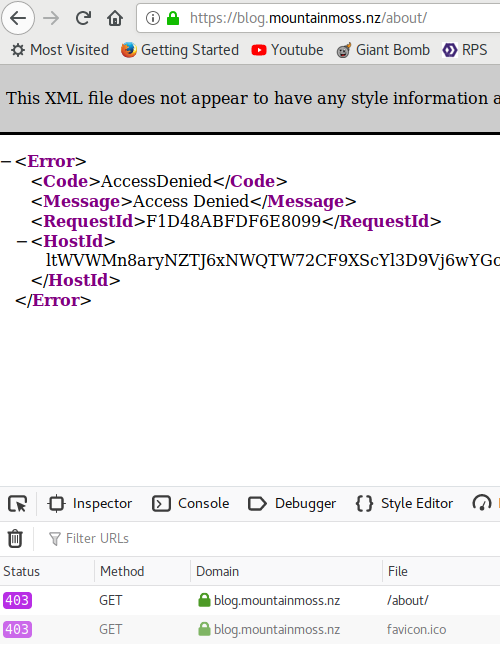

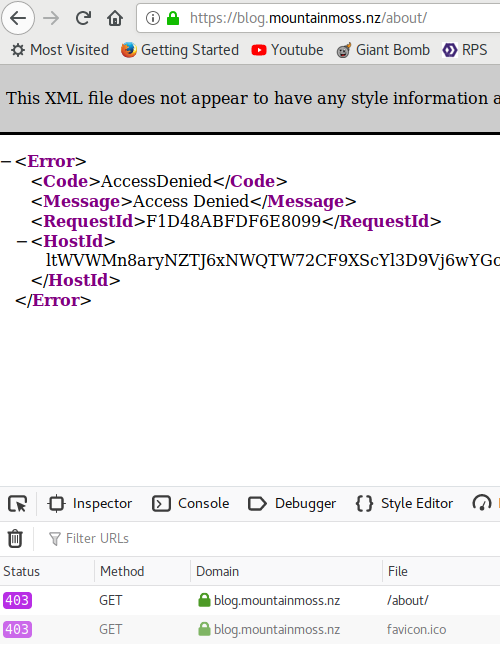

That worked for the homepage, but I was getting persistent errors like this on everything else.

What I eventually found is that you need to grant read privileges to the S3 canonical user ID (the S3CanonicalUserId field) of the origin access identity your CloudFront distribution uses, which is mentioned in the troubleshooting docs.
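If you'd rather script the lookup, the sketch below reads the origin access identity (OAI) from the distribution's config and then asks CloudFront for its S3CanonicalUserId. It assumes the AWS CLI is configured and that the S3 bucket is the distribution's first origin; both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: find the S3 canonical user ID CloudFront uses to read the bucket.
# Assumes the AWS CLI is configured and the S3 bucket is the distribution's
# first origin (Items[0]); both helper names are hypothetical.

# The origin stores the OAI as "origin-access-identity/cloudfront/<OAI_ID>";
# keep only the text after the last slash.
oai_id_from_path() {
  printf '%s\n' "${1##*/}"
}

s3_canonical_id_for_distribution() {
  local dist_id="$1" oai_path
  oai_path=$(aws cloudfront get-distribution-config --id "$dist_id" \
    --query 'DistributionConfig.Origins.Items[0].S3OriginConfig.OriginAccessIdentity' \
    --output text)
  # Unlike get-cloud-front-origin-access-identity-config, this call's output
  # includes the S3CanonicalUserId field.
  aws cloudfront get-cloud-front-origin-access-identity \
    --id "$(oai_id_from_path "$oai_path")" \
    --query 'CloudFrontOriginAccessIdentity.S3CanonicalUserId' \
    --output text
}
```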

Here are the steps to correct the ACLs on your bucket and its objects (files).

Replace the sections in curly braces {{}} with the values for your AWS account, taken from the output of the commands.

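- Get your CloudFront distribution's details. The S3 origin in the output shows the origin access identity (OAI) the distribution uses, in the form origin-access-identity/cloudfront/{{OAI_ID}}:

  aws cloudfront list-distributions

- Get the canonical user ID for S3 access. It is the S3CanonicalUserId field in the output, used as {{YOUR_S3_ID}} below:

  aws cloudfront get-cloud-front-origin-access-identity --id {{OAI_ID}}

- Set permissions on the bucket for CloudFront. This replaces the bucket's whole ACL, so keep full control for yourself. The id= grantee must be your account's canonical user ID (shown by aws s3api list-buckets --query Owner.ID), not the 12-digit account number:

  aws s3api put-bucket-acl --bucket {{YOUR_BUCKET}} --grant-full-control id={{YOUR_ACCOUNT_ID}} --grant-read id={{YOUR_S3_ID}} --grant-read-acp id={{YOUR_S3_ID}}

- Set the permission on each object:

  aws s3api put-object-acl --bucket {{YOUR_BUCKET}} --key {{WEBSITE_FILE}} --grant-read id={{YOUR_S3_ID}}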

That should get you access via the CloudFront URL, or via your public URL if you've configured the domain, SSL, etc.
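The put-object-acl command covers one key at a time. For a bucket that already holds the whole site, a loop like the one below applies the same read grant to every object. It's a hypothetical helper that assumes AWS CLI credentials allowed to list the bucket and call PutObjectAcl; note that with --output text an empty listing prints None, which the loop skips.

```shell
#!/usr/bin/env bash
# Hypothetical helper: grant the CloudFront OAI read access on every object
# already in the bucket, one put-object-acl call per key.
grant_read_on_all_objects() {
  local bucket="$1" s3_id="$2" key
  # --output text prints keys tab-separated; put each on its own line so
  # keys containing spaces stay intact.
  aws s3api list-objects-v2 --bucket "$bucket" \
    --query 'Contents[].Key' --output text |
    tr '\t' '\n' |
    while IFS= read -r key; do
      # Skip blank lines and the "None" printed for an empty bucket.
      case "$key" in ""|None) continue ;; esac
      aws s3api put-object-acl --bucket "$bucket" --key "$key" \
        --grant-read "id=$s3_id"
    done
}
```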

As I update the blog, I'll use the --grants option of the aws s3 cp command to set the ACLs on every object as it's uploaded.

aws s3 cp --recursive {{WEBSITE_DIRECTORY}} s3://{{YOUR_BUCKET}}/ --grants full=id={{YOUR_ACCOUNT_ID}} read=id={{YOUR_S3_ID}} readacl=id={{YOUR_S3_ID}}
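A small wrapper keeps the placeholders in one place. This sketch swaps cp --recursive for aws s3 sync, which accepts the same --grants option but only uploads new or changed files. deploy_site is a hypothetical name, and the owner value must be your account's canonical user ID rather than the 12-digit account number.

```shell
#!/usr/bin/env bash
# Hypothetical deploy helper: upload the site and set object ACLs in one pass.
# Uses `aws s3 sync` instead of `cp --recursive` so unchanged files are skipped.
deploy_site() {
  local site_dir="$1" bucket="$2" owner_id="$3" s3_id="$4"
  # Grant format is Permission=Grantee_Type=Grantee_ID for every entry.
  aws s3 sync "$site_dir" "s3://$bucket/" \
    --grants "full=id=$owner_id" "read=id=$s3_id" "readacl=id=$s3_id"
}
```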

Hi there,

I'm working on a static site generator to note down all the stuff I'm learning in web development.

Hopefully it's useful as a record for other people, because everything seems to be moving into private silos run by a handful of companies, where it can't be searched by future users.

I wanted to use Tumblr for posting my code musings, but then it was bought by Yahoo, and all the drama afterwards shook my confidence.

I think I used Blogger 10 years ago, but that wasn't interesting in a technical sense, so it felt like busywork.

Let’s see how this goes eh?